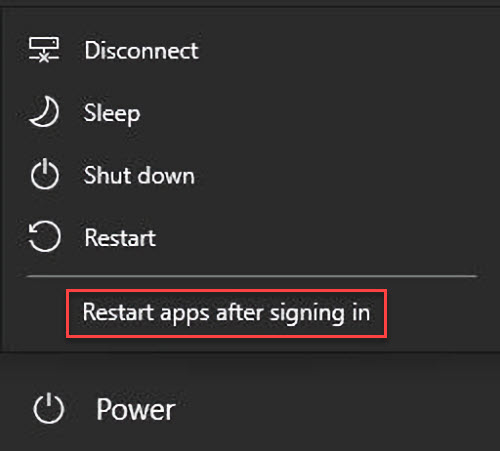

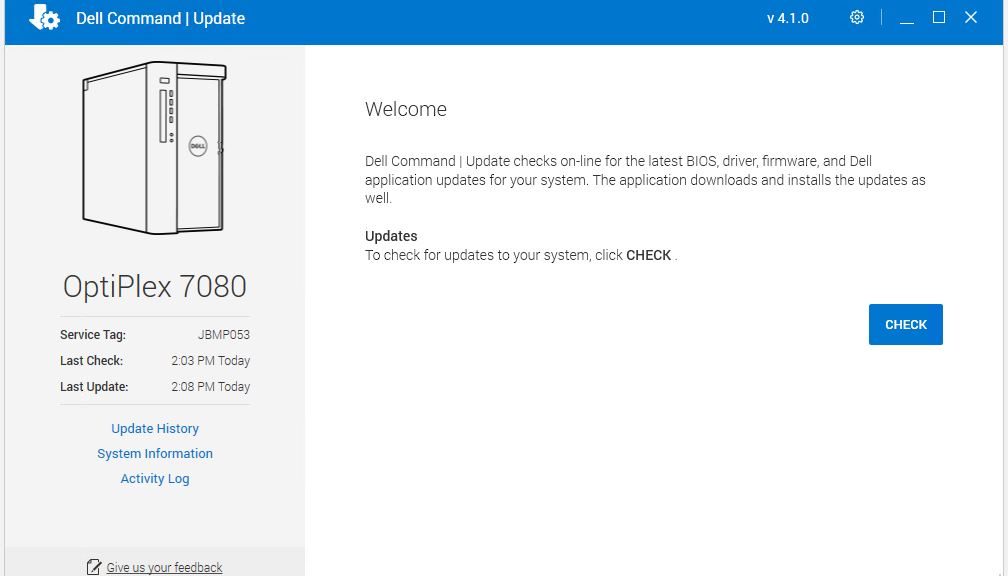

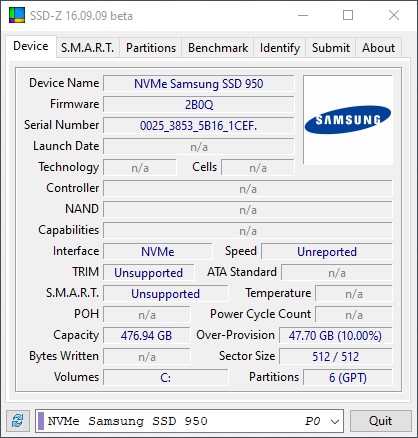

Just yesterday, a new Dev Channel build arrived for Insiders. Among the many items mentioned in the 21359 announcement is an interesting new Restart item in Start → Power. Shown boxed in red in the lead-in graphic here, it reads “Restart apps after signing in.” This new 21359 Power option restarts apps after it restarts the OS, to put things back as they were.

Why Does the New 21359 Power Option Restart Apps?

Apps have sometimes restarted after a reboot in earlier Insider Previews, but not at the user’s behest. One can only suppose that enough users provided feedback that this feature might be nice. But it’s definitely something that users will want to choose (or not) as circumstances dictate.

Thus, for example, when I’m troubleshooting or getting ready to install new hardware or a new app, I’d much prefer to restart without all the “stuff” currently occupying my desktop. OTOH, if I’m restarting after an update or to incorporate a new driver, I’d just as soon go back to whatever I was doing beforehand.

This new option lets users pick a restart scenario. The old, plain Restart brings no apps back. The new “Restart apps after signing in” restores the current desktop state. Both have their uses, so I must approve and endorse this change.

NOTE: New Setting Is a Toggle

One more thing: this new item is actually a toggle. If you choose it and use it, the checkbox stays checked. Thus, if you don’t want to use it the next time you restart, you must uncheck the item to go back to the prior status quo. Don’t forget, especially if you don’t want this setting to become your default Restart behavior.