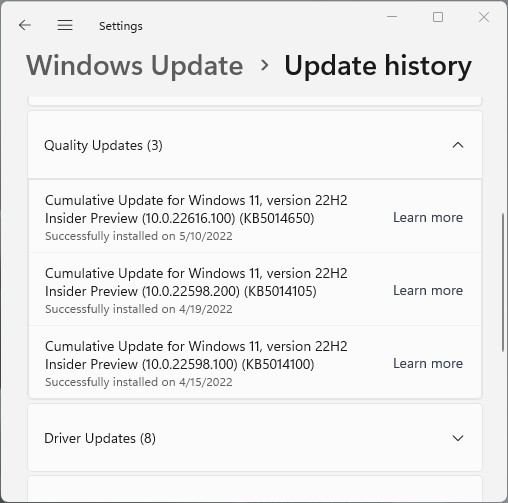

I’m still finding my way into the “new regime” for the Dev Channel in Windows 11. A couple of days ago, MS dropped Build 25120 (here’s that announcement). I’ve been playing catch-up ever since, with a number of interesting issues popping up. In true Whack-a-Mole style, I’ve been able to fix everything so far. But no sooner do I whack one than another pops up, which is why I assert that Build 25120 Shenanigans continue. Let me explain…

Which Build 25120 Shenanigans Continue?

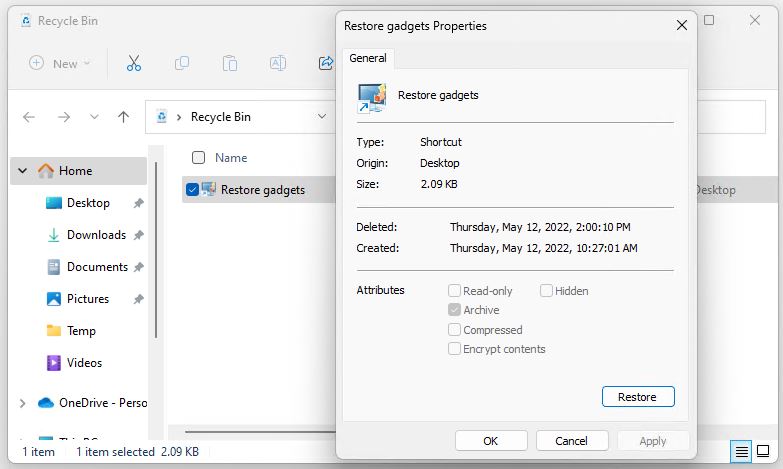

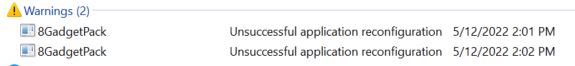

Yesterday, I described issues with the Thunderbolt dock and its attached peripherals (unplugging and re-plugging the dock fixed that one). I also described how 8GadgetPack disappeared and required a re-install AND a repair for a successful resuscitation.

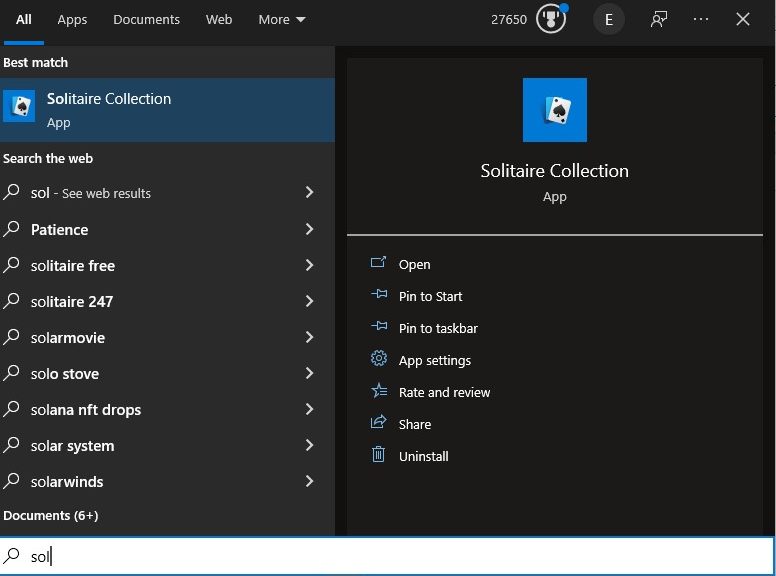

Later that afternoon, I ran into an interesting gotcha, described in a Twitter thread titled "Dude! Where's my Control Panel?" Suffice it to say that I couldn't search from the Start menu, which proved hugely frustrating. A DISM /RestoreHealth operation and a File Explorer restart set things to rights for that one. FWIW, I also ran SFC /scannow, but it found nothing in need of repair.

I can now say with confidence, based on recent, painful personal experience, that typing program names into the Start menu search box beats the pants off figuring out and using command-line equivalents! Until I fixed that glitch, I was NOT a happy camper.

What’s Next?

I can't say for certain, but I expect I'll keep finding and fixing stuff as new Dev Channel releases find their way onto my test PCs and VMs. I'm certainly getting a good education in minor glitches and gotchas and their associated fixes. All I can say to MS and the WindowsInsiders team is: "Keep them moles a'comin'." I'll keep whackin' 'em as they pop up!