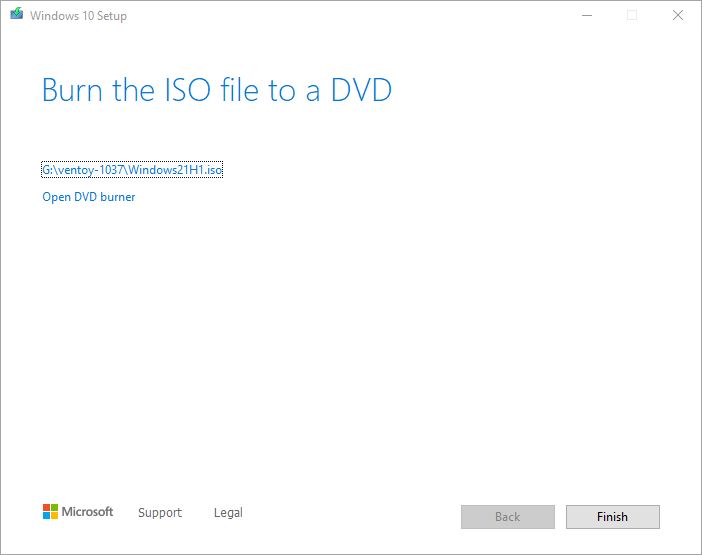

Holy moly! MS is really on the ball this time. Only yesterday, I started updating a select few of my 20H2 PCs to 21H1 through the enablement package. Today, 21H1 ISO files are download-ready. I used the Media Creation Tool (MCT) to grab a copy, straight from the Download Windows 10 page. MCT's final screen serves as this story's lead-in graphic.

Because 21H1 ISO Files Are Download-ready, You Can Grab One

There's another story on this same topic at Windows Latest that explains how to reset the user agent in Chrome to do a direct download. That download comes from the Microsoft Software Download facility (the same source that UUPdump.net and HeiDoc.net use for their far-ranging download tools). As for me, MCT is easy and straightforward enough to use that I just jumped on it instead.

Here are some timings from my personal experience, using my nominal GbE Internet connection from Spectrum at Chez Tittel (all times are approximate, not stopwatch level):

Download time: 2:00

Verify download: 0:20

Create ISO media: 1:15
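For anyone who wants to check the arithmetic behind "under 4:00," here is a tiny sketch that adds up the three approximate timings. It assumes each value is minutes:seconds; the helper names are made up for illustration.

```python
# Sum the approximate step timings listed above.
# Assumption: each value is "minutes:seconds".
def to_seconds(mmss: str) -> int:
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def to_mmss(total_seconds: int) -> str:
    return f"{total_seconds // 60}:{total_seconds % 60:02d}"


steps = {
    "Download time": "2:00",
    "Verify download": "0:20",
    "Create ISO media": "1:15",
}

total = sum(to_seconds(t) for t in steps.values())
print(to_mmss(total))  # 3:35
```

That comes to 3:35, comfortably under the four-minute mark.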

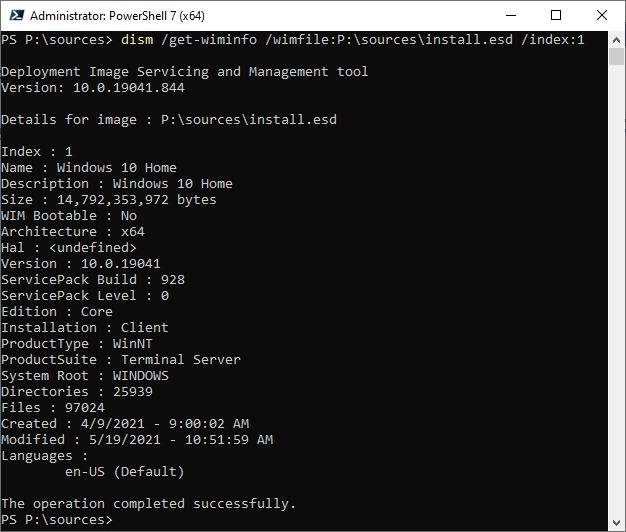

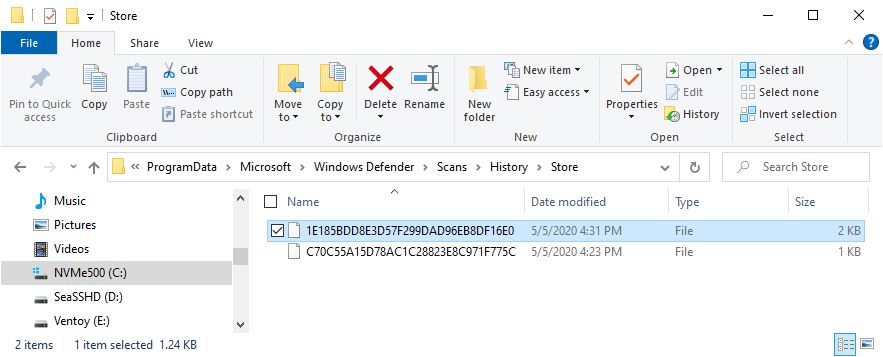

Thus, the whole thing took under 4:00 to complete. The file itself is 4.24 GB in size. It mounts in File Explorer with a volume name of ESD-ISO. And sure enough, the Sources directory includes an install.esd file rather than install.wim. Here's what DISM reports when I inspect that file. (Note: Windows 10 Home shows up as Index 1 in most multi-edition image files, so this is NOT a surprise.)

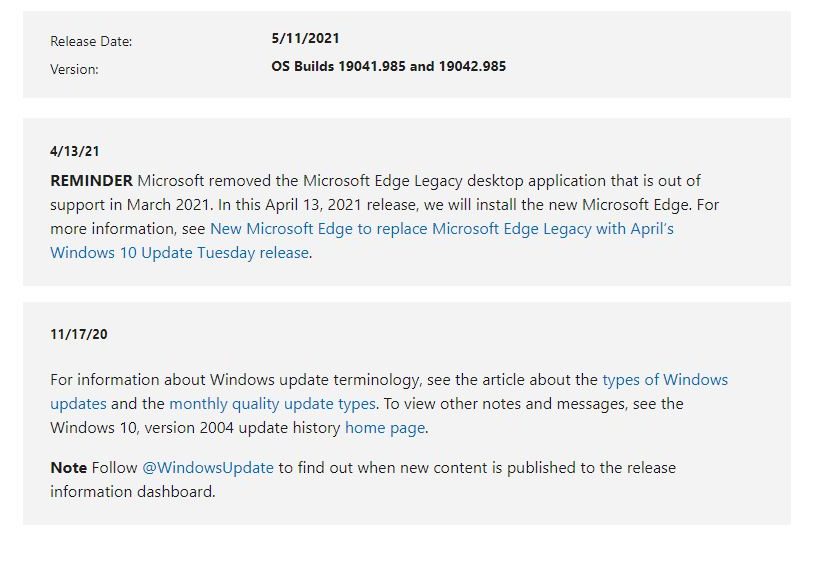

Note the version shows up as 19041.928.


Just for the record, here's what I found in that image file at the other indexes, trying each in turn until they stopped working:

2: Windows 10 Home N

3: Windows 10 Home Single Language

4: Windows 10 Education

5: Windows 10 Education N

6: Windows 10 Pro

7: Windows 10 Pro N

A value of 8 returned an error message, so I assume 1 through 7 are the only valid indexes. An interesting collection, to be sure! Check it out for yourself if you need a 21H1 ISO from this list. As you can tell from the destination directory, I'll be copying it onto my Ventoy drive sooner rather than later. Cheers!
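If you'd rather automate that index-by-index probe, here's a rough Python sketch. It calls `dism /Get-ImageInfo /ImageFile:<path> /Index:<n>` for n = 1, 2, 3, … and stops at the first failure. Several assumptions apply: DISM exits with a non-zero code for an out-of-range index (the article only says index 8 produced an error message), each result includes a `Name : …` line, and the prompt is elevated. The function name `list_editions` and its `run` parameter are hypothetical. (Running the same DISM command without `/Index` should list every image in one pass anyway.)

```python
import subprocess
from typing import Callable, List


def list_editions(image: str, run: Callable = subprocess.run,
                  max_index: int = 50) -> List[str]:
    """Probe image indexes 1, 2, 3, ... with DISM until one fails.

    Hypothetical helper; `run` is injectable so the loop can be tested
    without DISM. Assumes a non-zero exit code means "no such index".
    """
    editions = []
    for index in range(1, max_index + 1):
        result = run(
            ["dism", "/Get-ImageInfo", f"/ImageFile:{image}", f"/Index:{index}"],
            capture_output=True,
            text=True,
        )
        if result.returncode != 0:
            break  # first invalid index: stop probing
        # Assumes DISM prints a line such as "Name : Windows 10 Home".
        name = next(
            (line.split(":", 1)[1].strip()
             for line in result.stdout.splitlines()
             if line.strip().startswith("Name :")),
            "?",
        )
        editions.append(f"{index}: {name}")
    return editions
```

On Windows, with the ISO mounted (the drive letter here is hypothetical), `for entry in list_editions(r"E:\sources\install.esd"): print(entry)` should print one line per edition.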