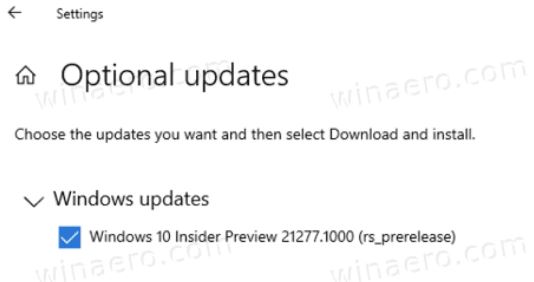

OK, then. Yesterday evening, after finishing work for the day, I used Laplink PCmover Professional to migrate the Windows 10 installation from the old Jetway mini-ITX PC to the new Dell Optiplex 7080 Micro I've been writing about so much lately. I'm pleased to say that, on this latest attempt, the program did what its makers claim it does with reasonable dispatch and facility. An earlier attempt about 10 days ago failed; I now suspect a pending, incomplete update on the mini-ITX PC was to blame. Thus, I must rate my recent Laplink PCmover experience as "mostly positive."

Preparing for Recent Laplink PCmover Experience

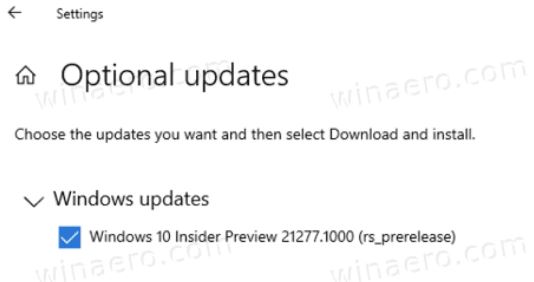

Before I got started on the transfer, I made sure both source and target PCs were completely updated. I also used Macrium Reflect to create an image backup of each PC, and made sure I had the Macrium Rescue Media at my disposal in case I needed to roll back either or both machines. Finally, I cleaned up the filesystems on both machines using Disk Cleanup, UnCleaner, and manual file deletions from the Documents and Downloads subfolders under each user account. With all that behind me, I was ready to go.

Working Through Recent Laplink PCmover Experience

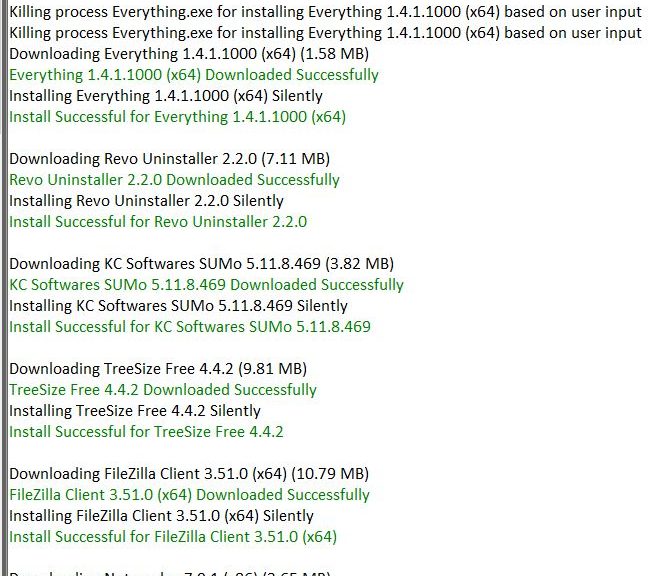

As is often the case, the prep work took longer than the transfer. The source PC sat upstairs and the target PC in my office, connected over my wired in-home GbE network. The whole transfer involved 2.3 GB of data across 48K-plus folders and just under 8K files, along with a merge of 82K-plus registry values (just over 9 MB in size) from the source registry into the target's.

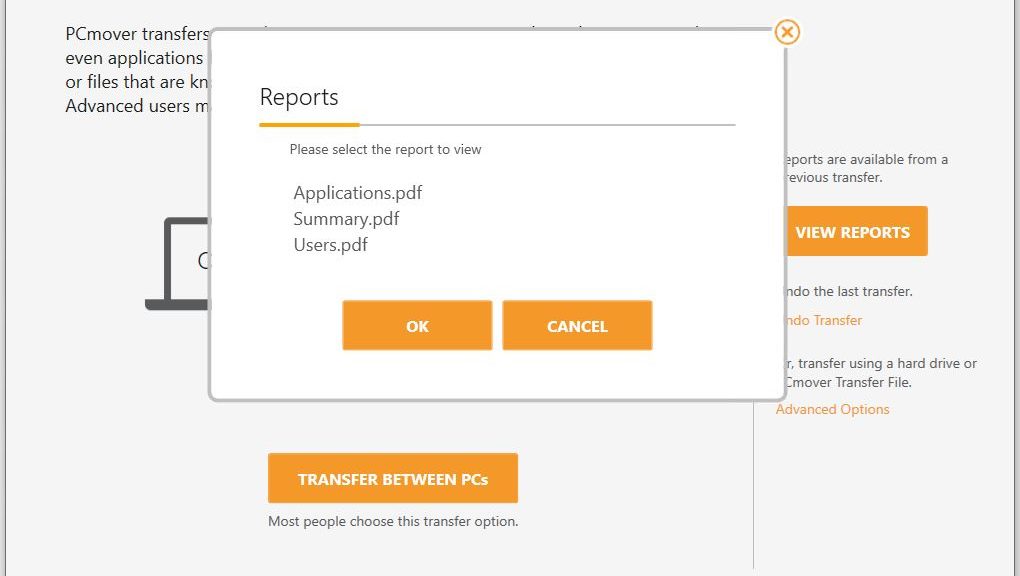

To my surprise, the whole process took just over 11 minutes, according to the program's Summary.pdf report file. I've used Laplink's PCmover before, and I don't remember things ever going that fast. Best guess: this machine has a relatively small number of applications installed and no huge file holdings under the various user account folders. Thus, it stands to reason that it wouldn't take long to migrate from one machine to the other. I was able to confirm that licensed applications from the source PC did work on the target after the process concluded, though I did have to provide (or re-activate) licenses before I could use those programs.
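A quick back-of-the-envelope check supports that guess. This is a minimal sketch, assuming "2.3 GB" means 2.3 × 10^9 bytes and rounding "just over 11 minutes" down to exactly 660 seconds, so the result is a slight overestimate. It divides data moved by elapsed time and compares the average to the nominal 1 Gbit/s GbE line rate:

```python
# Rough average-throughput estimate for the PCmover transfer described above.
# Assumptions (not from Laplink's report): "2.3 GB" means 2.3 * 10**9 bytes,
# and "just over 11 minutes" is rounded down to exactly 660 seconds.
BYTES_TRANSFERRED = 2.3 * 10**9
ELAPSED_SECONDS = 11 * 60
GBE_LINE_RATE_BPS = 1_000_000_000  # nominal Gigabit Ethernet line rate, bits/s


def average_throughput(bytes_moved: float, seconds: float) -> tuple[float, float]:
    """Return (megabytes per second, megabits per second) in decimal units."""
    bytes_per_second = bytes_moved / seconds
    return bytes_per_second / 1e6, bytes_per_second * 8 / 1e6


mb_s, mbit_s = average_throughput(BYTES_TRANSFERRED, ELAPSED_SECONDS)
share_of_gbe = mbit_s * 1e6 / GBE_LINE_RATE_BPS

print(f"{mb_s:.2f} MB/s, {mbit_s:.1f} Mbit/s, {share_of_gbe:.1%} of GbE line rate")
# prints "3.48 MB/s, 27.9 Mbit/s, 2.8% of GbE line rate"
```

An average under 3% of the wire's nominal capacity suggests (though it doesn't prove) that the network wasn't the bottleneck; per-item work on tens of thousands of folders, files, and registry values likely accounts for most of those 11 minutes.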

Benefits of Laplink PCmover Experience

I paid full retail for the software just over a year ago, on December 6, 2019, and the price hasn't budged since then. It cost me $42.45 ($39.99 MSRP plus about $2.50 in sales tax).

The obvious benefits of using PCmover are speed, ease of migration, and convenience. I've used this tool before, and I imagine I'll use it again. I could have turned to the Microsoft User State Migration Tool (aka USMT), but here's what Microsoft's documentation says about that tool's limitations:

> USMT is intended for administrators who are performing large-scale automated deployments. If you are only migrating the user states of a few computers, you can use PCmover Express. PCmover Express is a tool created by Microsoft's partner, Laplink.

I used the Professional rather than the Express version of PCmover. Express is free, but only for non-commercial use. Because I'm writing about it for publication, I naturally chose the paid version. The Express version does most of what the Professional version did for me, but it does not transfer applications, permit image-based migration, or copy hard disk contents from source to target PC.

Where Laplink PCmover Experience Fell Short

I did have a little cleanup to do after the migration process concluded. PCmover does not, as I discovered, move all browser settings and preferences from source to target PC. Thus, I had to reinstall some extensions and reset default home page settings in Chrome, Edge, and Firefox. As far as I could tell, though, it did move favorites/bookmarks.

All in all, it was a positive experience. It produced a running PC that worked properly, with 99+% of the user accounts, preferences, settings, and files from the old machine ready, available, and working on the target PC. As far as I'm concerned, the US$40-odd PCmover cost me was worth it, and it produced the desired outcome. 'Nuff said.